The Battle of the Big Models has dominated 2023, and now the major tech giants are setting their sights on AI wearables, particularly smart glasses. According to The Information, Meta, Google, Microsoft, Amazon, and Apple are all gearing up to integrate AI big models into camera-equipped wearables such as smart glasses. They see smart glasses as an ideal platform for AI big models because the devices can capture several types of information, including sound, pictures, and video.

Sources close to the matter have revealed that leading AI startup OpenAI is working on integrating its "GPT-4 with Vision" object recognition software into the products of social media company Snap. This integration may bring new functionality to Snap's smart glasses, Spectacles. Meta, meanwhile, recently showcased what AI functionality can do in its Ray-Ban smart glasses. Through an AI voice assistant, the glasses can describe what the user sees and make recommendations, such as which shirts to pair with which pants. They can also translate Spanish-language newspapers into English.

Amazon's Alexa AI assistant team is also exploring a new type of AI device with a sense-setting feature. Like many cell phone manufacturers, Google has begun integrating AI features into its phones. In June of this year, Apple unveiled the Apple Vision Pro headset, which is set to go on sale next year. However, according to speculation from The Information, the device may not initially feature multimodal AI functions. As a new era in mobile devices arrives, how will tech giants such as Apple, Microsoft, Google, and Meta strategize for this new battleground? How will they leverage their AI capabilities in their major hardware offerings? Which new AI hardware will emerge as the optimal carrier for AI big models? These questions mark the onset of an AI hardware innovation war.

01 Google has integrated its mobile AI assistant Pixie into smart glasses software, enabling the provision of search services

A demo video for Gemini, Google's recently released AI model, showed the AI guessing the name of a movie based on a person acting it out. The video also showcased the model's capabilities in map navigation, problem-solving, and more.

Although the video may have been edited, it still conveys Google's fundamental aim: to develop an ever-present AI capable of providing immediate feedback or assistance based on what the user is doing. A source familiar with Google's consumer hardware strategy noted that it might take several years for Google to achieve this goal, as implementing environment-based computing would consume significant power. For now, Google is revamping the operating system of its Pixel phones to integrate smaller-scale Gemini models, enhancing the capabilities of Pixie, its mobile AI assistant.

Given Google's extensive expertise in search technology, The Information believes that an AI device capable of learning and predicting users' needs based on environmental data aligns well with Google's strengths. Despite the setback of Google Glass's failure a decade ago, Google has been proactive in encouraging Android phone manufacturers to utilize their devices' cameras to capture and analyze images via a cloud-based system, leading to the development of applications like "Google Lens" for image search.

Insiders familiar with Google's strategy revealed that the company has discontinued the development of glasses-type devices but continues to focus on software development for such devices. Google intends to license its image search software to hardware manufacturers, mirroring its approach to the development of the Android mobile operating system for companies like Samsung. This move underscores Google's commitment to playing a pivotal role in advancing its big AI models.

02 Microsoft is implementing AI software on its HoloLens, enabling multimodal interaction through chatbots

Amid the surge in demand for large multimodal AI models, Microsoft's researchers and product teams have begun enhancing the company's voice assistant and exploring how to integrate AI functions into compact devices. Patent applications and insider sources suggest that Microsoft's models could support affordable smart glasses and other hardware. The company aims to deploy AI software on its AR headset, HoloLens, so that users can capture images with the headset's front-facing camera and send them to an OpenAI-powered chatbot for object recognition. Users can then converse with the chatbot to obtain further information.

03 Apple: Vision Pro may not be loaded with big AI models when it's released

While Apple's Vision Pro boasts several new multimodal features, Apple's progress in AI big model technology lags slightly behind that of its competitors. At present, there are no indications that the Vision Pro will offer complex object recognition or other multimodal AI functions at launch. However, Apple has spent years refining the Vision Pro's computer vision capabilities, enabling the device to recognize its surroundings quickly. This includes rapidly identifying furniture and discerning whether the wearer is in the living room, kitchen, or bedroom. Apple may also be developing large multimodal models capable of recognizing images and videos. Nevertheless, compared with the eyewear being developed by other companies, the Vision Pro is bulky, heavy, and ill-suited for everyday outdoor use. Moreover, Apple purportedly halted development of its AR glasses earlier this year to prioritize sales of its headset. The timeline for resuming work on the AR glasses remains uncertain.

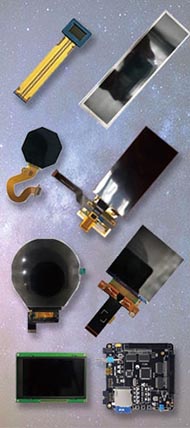

04 Meta: Has adapted Ray-Ban glasses, offering new AI features

Meta CTO Andrew Bosworth recently announced on Instagram that some users of Ray-Ban smart glasses would soon have access to large AI models directly on their glasses. Meta views the Ray-Ban glasses as a precursor to AR eyewear, which blends digital images with the real world. Meta originally planned to launch AR glasses in the coming years but has faced challenges, including reports of weak user adoption and setbacks in next-generation display development. However, the arrival of large multimodal AI models has re-energized Bosworth and his team, offering the potential for a range of new AI features on smart glasses in the near term.

05 AI wearables + camera, or the best hardware carrier for big models

This isn't the first attempt by Silicon Valley giants to build wearable devices equipped with cameras. Google, Microsoft, and other tech companies previously worked on AR headsets. Their original aspiration was to project a digital screen onto the headset's semi-transparent display and offer step-by-step guidance to help users complete tasks. However, owing to the complexity of the optical design, many of these products met with a lackluster reception.

OpenAI has rolled out a multimodal large language model that uses visual recognition to discern what users are looking at and doing. It can then supply additional information about those activities and objects. As large language models become more lightweight, it becomes feasible for smaller devices to host them and respond promptly to user queries. Nevertheless, given how much privacy and security matter to users, widespread acceptance of smart glasses and other AI devices with embedded cameras may take some time.

The Information believes that smart glasses equipped with AI assistants have the potential to be as revolutionary as smartphones. These devices can serve as educational aids, guiding students through math problems or essay questions. Moreover, they can provide real-time environmental information to users, such as translating billboards or offering guidance on resolving car issues. Pablo Mendes, a former engineering manager at Apple and CEO of AI search company Objective, emphasizes the crucial role of big AI models in various technologies, including computers, cell phones, and other devices.

06 Conclusion: tech giants seek the best hardware carrier for AI big models

In the third wave of AI advancement, catalyzed by ChatGPT, the roles are clear: multimodal big models form the foundational infrastructure, while ChatGPT serves as the direct application. However, determining which devices can best realize ChatGPT's potential and serve as ideal carriers for big language models remains a priority for OpenAI, Microsoft, Google, and other tech giants. Recent reporting from The Information suggests that camera-equipped smart glasses have become a significant focus for many industry leaders, while others are exploring new kinds of wearable AI devices. There is also a trend toward adapting various AI models for mobile phones.

It's not just the technology giants that share this perspective. In China, numerous AR glasses manufacturers are also eyeing the opportunity. According to an industry veteran with over a decade of experience in AI, robots and AR glasses could emerge as the primary beneficiaries of the AI big model wave.

However, amid these shared design concepts, the question remains: which company will ultimately develop the most efficient lightweight AI model? And who will succeed in creating the most functional smart glasses? We eagerly await the progress of the major tech giants as they strive to provide the answer.